Unpacking the GenAI Robot Hype: Part 1 - A New Generation of Robot Hardware, powered by GenAI

March 21st, 2025 - Vijay Pradeep

Venture Fellow at Matter Venture Partners

(~15 Minutes to Read)

Series Introduction & Overview

We are witnessing a major shift in AI-powered robotics, as applications move from pre-programmed, highly repetitive tasks to complex tasks requiring human-like intuition and reactiveness. This is enabling the next generation of robots to perform a broader set of labor-intensive work that is currently carried out by wage-earning blue-collar workers. Today’s robot automation market, worth $Bs, largely comprises specialized industrial automation. But, for the first time ever, by combining GenAI with custom hardware, robots have an opportunity to capture a larger portion of the global blue-collar labor market (worth $Ts). In particular, GenAI-powered robot arms, and robots with arms, are poised to carry out jobs that span a variety of sectors and skill levels: fruit pickers, housekeepers, warehouse order pickers, plumbers, welders, and many more. Even capturing just a portion of a subcategory of this blue-collar market, such as the US’s $100B “Building Cleaning Workers” segment [1], could already be bigger than the entire current robot arm market, which is valued at only around $20B.

The excitement around this shift is evident in the massive investment that has been pouring into the sector. For example:

Physical Intelligence raised $400M in Nov 2024 - link

Figure AI is in talks to raise $1.5B - link

SoftBank is planning to invest $500M in Skild AI - link

NVIDIA is doubling down on “Physical AI” - link

China's Xpeng may invest up to $13.8 billion in humanoid robots - link

And the list goes on, with the investment excitement still rising.

This series unpacks how the audacious goals & promises of AI-powered robots are suddenly so compelling and worth $Bs of investment, despite barely any revenue to show for it (yet). We’ll explore it in 3 parts:

Part 1 - A New Generation of Robot Hardware, powered by GenAI

Part 2 - Lower Marginal-Cost, Mass-Produced Robots, thanks to GenAI. (Coming Soon)

Part 3 - The Insatiable Need for Robot Training Data, driven by GenAI (Coming Soon)

[1] There are 3m “Building Cleaning Workers”, each with an average annual salary of ~$36k. Occupation code 37-2021, Bureau of Labor Statistics
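As a rough check on the comparison above, the footnote's numbers can be multiplied out. This is a back-of-the-envelope sketch that uses only the rounded figures quoted in this article (3 million workers, ~$36k average salary, ~$20B robot arm market); the variable names are illustrative and the inputs are not precise statistics.

```python
# Rounded figures quoted in the article; treat them as approximations.
workers = 3_000_000              # "Building Cleaning Workers" in the US
avg_salary_usd = 36_000          # approximate average annual salary
robot_arm_market_usd = 20e9      # approximate current robot arm market

# Total annual wages paid in this one labor segment.
segment_usd = workers * avg_salary_usd

# How many times larger the segment is than the robot arm market,
# and what share of the segment would match that market.
ratio = segment_usd / robot_arm_market_usd
matching_share = robot_arm_market_usd / segment_usd

print(f"Segment wages: ${segment_usd / 1e9:.0f}B")
print(f"Multiple of robot arm market: {ratio:.1f}x")
print(f"Share of segment equal to robot arm market: {matching_share:.1%}")
```

Under these assumptions, capturing a bit under a fifth of this single segment would already match the size of today's robot arm market.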

Figure 1 - “Blue collar” work spans a variety of industries and skill sets, with almost all involving significant manual labor and requiring expertise across a variety of disparate tasks. General AI powered robots are poised to capture a major piece of these $Ts labor markets.

Part 1: A New Generation of Robot Hardware, powered by GenAI

Key Points

Robotics advancements have historically happened in waves, roughly every 20 years. We’re at the beginning of the next wave, powered by GenAI.

With the promise of GenAI, humanoids are suddenly now a reasonable form-factor. There are also many other non-humanoid form factors that can benefit from GenAI, along with other ‘beyond-human’ robot designs.

As with any new technology advancement, the initial breakthroughs and promises are only the beginning. There is still significant work that needs to be done to capture the benefits of Generative AI in robotics and build a mature ecosystem around it.

Software has driven the evolution of robots for decades

Robots are designed and built to perform meaningful tasks in the physical world. However, it’s the breakthroughs in software and computers behind these physical robot systems that have catalyzed the industry to rethink how they’re designed and built. Roughly every 20 years, a breakthrough in software and computation has catalyzed the creation of an entirely new class of robot systems, with each new class also bringing a step change of increased system complexity. This has made successive generations of more advanced robots significantly more challenging to design, develop, and deploy. Thus, modern robotics companies have required bigger teams, larger investments, and collections of industry players & vendors to bring these systems to fruition, but every new generation of robots was also able to capture a larger portion of the addressable market.

Today’s breakthroughs in software and computation, specifically Generative AI, place the industry on the cusp of yet another new generation of robots. Designing & developing these Generative AI powered robots will require even larger teams and even more investment into the sector than previous generations, but they also come with the promise of tackling an even larger portion of the addressable market, possibly worth trillions of dollars.

Custom, Fully-Constrained Robots - (~1970s onward)

These one-off custom robot systems operated in a fully-constrained environment. There was no need to sense the environment or make adjustments based on the environment, so these robots were able to complete tasks simply by following pre-programmed motions, managed by very primitive computers and analog control systems. Even as basic as these were, they were able to complete a variety of repetitive tasks, including automotive welding, automotive spray painting, and material handling. However, these workcells were often custom & application specific, so every robot workcell needed to be designed from scratch and every robot needed to be custom-programmed, resulting in high hardware & software costs. Customers only purchased these robot systems when they knew that they were going to keep them running continuously for many years, and sometimes decades, making the overall market opportunity small.

Figure 2 - Automotive welding robots are the canonical example of fully-constrained automation, and are still prevalent today.

(Left) - Unimate welding robots at a Chrysler plant in Michigan - 1979 (Source: Autoevolution.com)

(Right) - Kuka welding robots at a Tesla Gigafactory in Texas - approx 2022 (Source: Tesla)

Despite the roughly 40-year gap between photos, these two welding lines look awfully similar.

Custom, Semi-Constrained Robots with Sensors - (~1990s onward)

In the 1980s and 1990s, microprocessors and desktop-sized PCs were becoming much more common and more powerful. This made it much easier to do calculations on-the-fly, so it now became reasonable for a robot to process some limited sensor information and make changes to the robot’s movement accordingly. This allowed robots to tackle new applications where some aspects of environmental or task uncertainty couldn’t be removed mechanically. For example, these robots are able to track seams while welding, apply a constant force while polishing, or even detect the exact location of a hole to insert a part. These tasks are of higher value than the previous repetitive tasks, but the addition of sensors and custom software increases the overall cost and complexity of these systems, thus keeping the market opportunity small once again.

Figure 3 - (Left) A laser-line projector & depth sensor enable this Abicor-Binzel workcell to track a weld seam (Source: Abicor-Binzel)

(Right) A 3D depth camera enables this Tekniker workcell to pick up chaotically placed parts (Source: MVTec)

Mass-Produced, Discriminative AI Powered Robots (2010s onward)

This decade sparked several key breakthroughs in discriminative AI, and more specifically, convolutional neural network (CNN) based object classification models:

GPUs accelerating neural networks by 70x (Andrew Ng, 2008)

Widely accepted datasets and metrics to evaluate algorithm accuracy (Fei-Fei Li’s ImageNet & ILSVRC challenge, since 2010)

Convolutional neural networks that were finally more than a novelty and could actually be used in real world use-cases for object detection (AlexNet in 2012 and YOLO in 2015)

These new CNN discriminative AI algorithms could now replace the tens of thousands of lines of carefully crafted, hand-written sensor processing code, instead feeding millions of image pixels or other sensor data into neural networks trained on datasets. This also made robot sensor processing subsystems much less sensitive to environmental variations. Now, a door handle could be detected from both near and far, the ripeness of an apple could be ascertained regardless of the time of day or glare, and a robot pincher could pick up a screw regardless of its orientation. By effectively ‘solving’ these sensor processing subproblems, discriminative AI made it possible for a robot system to manage so much uncertainty in an environment and task that a robot workcell could be mass produced to meet the needs of an entire class of end-users: mass produced trash sorters, fruit pickers, and online order grabbers suddenly became possible. This once again grew the market opportunity for robots, but the role of AI in these new robot systems was limited to very specific subproblems in the overall system (object classification, object detection, grasp prediction, etc). This limited the total customer base (and hence value) of each new robot system that was designed.

Figure 4 - (Left) A mass produced, truck loading workcell from Dexterity AI. While various trucks and boxes might have different shapes, weights, & sizes, the system is general enough to adapt to customer-specific environments, thus enabling the workcell to be mass produced. (Source: Dexterity)

(Top Right): At AMP Robotics, a discriminative AI classification model is able to determine the material types for various refuse, and dynamically command a robot to grab and sort the specific items that need to be recycled (Source: Robohub)

(Bottom Right): Object detection & grasp location discriminative AI models enable RightHand Robotics piece-picking workcells to scale to handle 100s of different items without reconfiguration. (Source: RightHand Robotics)

Generative AI is the catalyst for a new generation of general purpose robots (late 2020s onward)

Generative AI was thrust into the limelight in 2022, with the launch of ChatGPT. For the first time ever, AI-powered systems were able to demonstrate near-human communication, reasoning, and decision making abilities. AI & modern-day software were no longer limited to addressing narrowly defined calculations and math problems: these systems could generate reasonable responses to more open-ended requests and questions by drawing from previous examples and data. Transferring this idea to robotics, Generative AI enables end-to-end AI models for robots, where AI isn’t just limited to addressing specific sub-problems or calculations. Instead, sensor data is directly fed into the model, and one large model (or multiple cascading models) processes these inputs to eventually generate robot outputs (i.e. motor commands). This enables robots to respond to even more diverse inputs & environments, and perform an even broader, general set of tasks.
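The structural difference between the two approaches can be sketched in a few lines. This is a toy illustration only, not how any real robot model works: the function names are hypothetical, the "detector" is a stand-in that picks the brightest pixel, and the "end-to-end model" is a tiny linear map standing in for a large learned network.

```python
from typing import Callable, Sequence

Vector = Sequence[float]

def modular_pipeline(pixels: Vector) -> list[float]:
    """Discriminative-AI style: a model solves one sub-problem,
    and hand-written code turns its answer into motor commands."""
    # Stand-in for a learned detector: index of the brightest pixel.
    target = max(range(len(pixels)), key=lambda i: pixels[i])
    # Hand-written rule: steer toward the target, drive at a fixed speed.
    # Assumes at least two pixels so the center offset is non-zero.
    center = (len(pixels) - 1) / 2
    steer = (target - center) / center
    return [steer, 0.5]

def end_to_end_policy(weights: Sequence[Vector]) -> Callable[[Vector], list[float]]:
    """End-to-end style: one model maps raw sensor data straight to
    motor commands, with no hand-written step in between."""
    def policy(pixels: Vector) -> list[float]:
        # One output per weight row; a real model would be far larger.
        return [sum(w * p for w, p in zip(row, pixels)) for row in weights]
    return policy

pixels = [0.0, 0.1, 0.9, 0.2, 0.0]
policy = end_to_end_policy([[1, 0, 0, 0, 0], [0, 0, 1, 0, 0]])
print(modular_pipeline(pixels))
print(policy(pixels))
```

In the first function, changing the task means rewriting the hand-written rule; in the second, it means retraining the weights, which is where the need for training data comes from.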

GenAI has suddenly made humanoids a common design choice for general purpose robots

This shift towards a general-purpose robot software stack (e.g. end-to-end robotics GenAI models) has sparked a new wave in robot hardware design. By assuming that generative AI will yield robot software that knows how to perform a variety of tasks, robot hardware can now be designed to be physically general-purpose enough to perform a variety of tasks. And, the majority of blue-collar labor occurs in and requires humans to move through human-centric environments (stairs, elevators, etc), pick up human-scale objects (tools, cups, boxes, etc), and interact with other humans in the vicinity. Not surprisingly, the humanoid form is very conducive to operating in this world built by humans, for humans. This is what has led to the sudden spike of new humanoid robot companies, with USD billions already invested. And, we’re already seeing the beginnings of these humanoid robots demonstrating that they are able to do work in these human environments. Figure’s humanoids are loading and removing car parts from machines in BMW’s Spartanburg, South Carolina plant, Agility’s Digit robots are moving boxes in Amazon warehouses, and Tesla’s Optimus robots are serving drinks at events. All of these examples are still only pilot programs or demonstrations, operating with a mix of pre-scripted behaviors, human-based teleoperation, and some Generative AI. We don’t know how long it’ll take for the GenAI promise to make these robots truly general purpose (versus just looking general purpose). But, even if these demos and pilot programs are a bit hardcoded, it still doesn’t change the fact that this hardware is physically capable of performing these human-centric tasks in human environments.

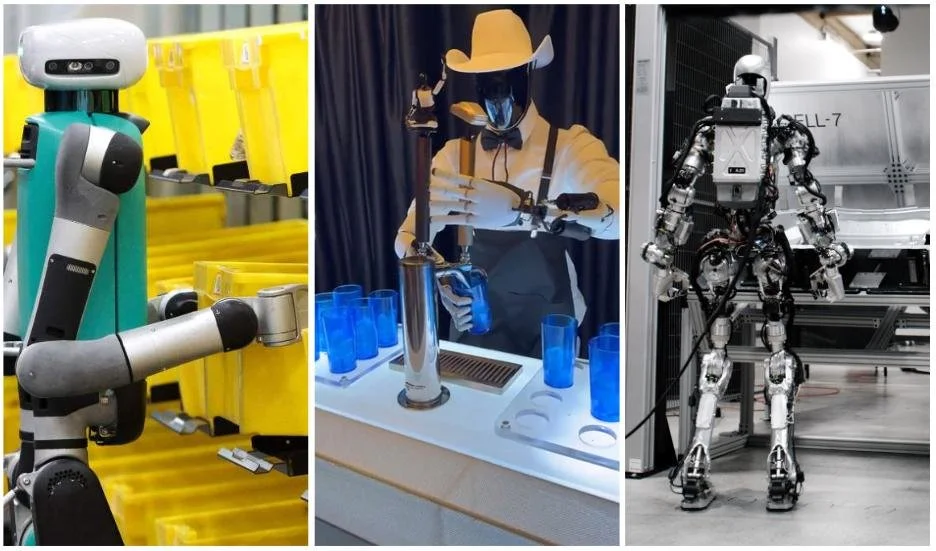

Figure 5 - Humanoids performing human-centric tasks in human-centric environments

(Left) Agility’s Digit robot carrying totes in an Amazon warehouse (Source: TheTimes.com)

(Middle) Tesla’s Optimus robot mixing drinks. (Source: Reddit)

(Right) Figure’s humanoid loading automotive parts in a factory machine (Source: Techeblog.com)

But, getting to GenAI robots might be harder than the hype suggests

Generative AI may shift development work, instead of reducing it

While Generative AI in robotics applications can be incredibly powerful at solving multiple tasks (perception, scene understanding, grasping, etc), there could still be a large amount of engineering work required to build a system that actually does useful work. Something similar happened when moving to discriminative AI. While the 1000s of lines of human-written computer vision code were no longer needed (great!), there was additional engineering beyond the models themselves (e.g. handwritten sanity checks on the model outputs, data pipelines to curate and down-select training data, evaluation tools for validating model performance, APIs to interface the model with the hand-written inputs and outputs that interact with the model). We’ve already seen this happen with LLMs. Even with cutting edge models like OpenAI’s GPT series or Meta’s Llama models, there is still a significant engineering effort to build a good chatbot (e.g. ChatGPT) or code-helper co-pilot on top of these models. Similarly, even with robotics models like Physical Intelligence’s PI0 model being open-sourced or Google’s Gemini Robotics model, there is still quite a bit of engineering work that goes into building an entire robot product. The list is still evolving as the industry matures, but some of the work likely includes GPU-cluster model training infrastructure, cloud/edge hybrid inference models to balance processing speed with latency, data ingestion systems and data processing pipelines, software & hardware tools for learning from human demonstrations of tasks, remote teleoperation workflows for humans to take over when a robot gets confused, safety and error checking to catch and prevent errant model outputs, and the list goes on.
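To make one item on that list concrete, here is a minimal sketch of the kind of hand-written check that might sit between a model and the motors. It is an illustration under stated assumptions, not any vendor's safety system: the function name, the per-step movement limit, and the "hold the previous command" fallback are all hypothetical choices.

```python
import math

def sanitize_command(command, previous, lower, upper, max_step):
    """Return a safe joint command, or hold the previous one
    if the model output is unusable."""
    # Reject outputs with the wrong number of joints or NaN/inf values.
    if len(command) != len(previous) or any(not math.isfinite(c) for c in command):
        return list(previous)
    safe = []
    for c, p, lo, hi in zip(command, previous, lower, upper):
        # Limit how far a joint may move in one control step.
        c = min(max(c, p - max_step), p + max_step)
        # Keep the result inside the joint's position limits.
        safe.append(min(max(c, lo), hi))
    return safe

previous = [0.98, 0.20]
lower, upper = [-1.0, -1.0], [1.0, 1.0]

# An errant jump on joint 0 is rate-limited, then clamped to the joint limit.
print(sanitize_command([2.0, 0.21], previous, lower, upper, max_step=0.05))
# A NaN from the model causes the previous command to be held.
print(sanitize_command([float("nan"), 0.0], previous, lower, upper, max_step=0.05))
```

A production system would need far more than this (collision checks, force limits, watchdogs), which is the point: the model is only one part of the product.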

We can’t just duct-tape Generative AI onto existing robots

Simply adding Generative AI on top of existing approaches seen in robot work cells forces customers to carry forward the ecosystem & technology baggage from the past 40 years of robot development, resulting in robots that are unnecessarily complex, expensive, and hard & slow to deploy. Even worse, these systems often end up relying on unnecessarily arcane APIs that actually make it harder to add AI into the system. Efficiently incorporating Generative AI into robots will require transforming how we build robots from an ecosystem, supply-chain, and technology perspective. This new generation of humanoid robot companies has to begin this journey of operating outside of the ecosystem, as they’re developing their own actuators, hardware, production workflows, and even factories, from the ground up. While this is a daunting task, the $Bs of investment definitely help to facilitate this change.

Robots don’t have to look like humans to benefit from GenAI

All the media buzz and investment aimed at a glitzy future full of humanoids miss some alternative applications for GenAI in robotics. Simply building a robot with the physical attributes of a human doesn’t make the robot as smart or capable as a human. That’s the irony of today’s humanoid robot boom. GenAI makes them more viable than ever, but this same technology also enables robots that are not human-shaped, often making them better suited for the task at hand.

And, even if the robot were as smart or capable as a human, there are lots of tasks that humans simply aren’t good at, or for which humanoids would be too costly or clunky to make sense. The technology sector & media have gone so deep on humanoids that some investors are calling new humanoid startups YAHRCs (yet another humanoid robot company). Robots don’t have to look like humans to be helpful to humans. This new generation of Generative AI robots can manifest in a variety of form factors other than humanoids:

Simple Robots

GenAI could power robots with simple, but nonetheless capable, mechanics. Robots like Hello Robot’s Stretch 3 and Roborock’s Saros Z70 have the physical capabilities & reach to retrieve objects and tidy entire households for elderly and disabled people, and for people who are simply busy.

Figure 6 - Two low cost general purpose household robots. Even with limited dexterity and robot joints, these robots are still capable of meaningful household tasks.

(Left) - Hello Robot’s Stretch Home Robot (Source: TechCrunch, Feb 2024)

(Right) - Roborock’s Saros Z70, which is able to tidy a household while vacuuming. (Source: Roborock)

Specialized Systems:

Many industrial tasks have very specific reach, dexterity, and payload requirements. Powering a mobile welding robot with GenAI would enable it to perform a variety of welding tasks across an industrial facility. Or, a mobile industrial robot arm could help palletize boxes or grab items off shelving in a disorganized and cluttered warehouse.

Figure 7 - Two examples of very-specialized non-humanoids

(Left) - A mobile welding robot from RecGreen. With classical robot programming, it would be prohibitively expensive to precisely map an entire facility and hardcode all the specific welds that would need to be performed. GenAI removes these burdens, as the specific movements for each task can be determined autonomously, in-situ, based on the sensor information it has just received. (Source: RecGreen)

(Right) - Warehouse robots like Boston Dynamics’s Stretch robot often need to carry heavy or large loads, making a human form factor less than ideal. (Source: Boston Dynamics)

Beyond-Human Systems:

The human form factor has withstood the test of time, and has enabled humanity’s dominance on Earth for millennia. But, there’s also no reason to assume that this is the optimal form factor for future robots that are useful to humanity. There are already many examples of biomimicry that have allowed humans to leverage the evolutionary optimization of the form factors of other forms of life on earth to achieve useful tasks. For example, mountain goats have shown us that 4 legs are better than 2 in steep or rough terrain. Combining that with the fact that hands are hugely helpful in grabbing objects and manipulating tools, we end up with robots like Boston Dynamics’s Spot Arm. And, drawing inspiration from our human-created world, wheels have been a huge win for machine mobility (which has also led to roboticists endlessly debating legs versus wheels). It’s quite possible that we’ll see hybrid approaches like ‘wheeled legs’ that have no equivalent in nature, like the Unitree Go2-W. Also, many industrial robots have significantly larger reach and strength than humans and humanoids, which is necessary for some industrial tasks. For instance, Dexterity’s Mech has a 5.4m armspan and can lift 60kg.

We don’t even have to limit ourselves to what already exists. For instance, why only stop at one or two arms? More arms could help in throughput-limited situations or tasks that would have normally taken more than one human. And, when talking about arms, they don’t even need to be human-like. Also, robot arms have generally stuck to very specific geometries and degrees-of-freedom to simplify geometric calculations. Trajectory planning for a snake-like arm is extremely painful with classical robotics algorithms, due to a combinatorial explosion stemming from the system’s increasing dimensionality. But, this is much less of an issue for GenAI, which natively operates in extremely high dimensionality. Obviously, building a snake-bot robot arm with 20 joints is going to be expensive. More likely, we’ll see arms and systems that add just a few more joints to venture beyond the usual 6 or 7 robot arm joints, to achieve challenging dexterity goals for tough applications.
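One simple way to see the combinatorial explosion is to count how many arm configurations a naive grid-based planner would have to consider. This sketch assumes each joint is discretized into a fixed number of positions; the resolution of 10 positions per joint is an arbitrary illustrative choice, and real classical planners use smarter strategies than exhaustive grids, so this overstates the cost in practice while still showing the trend.

```python
def grid_configurations(joints: int, positions_per_joint: int = 10) -> int:
    """Number of distinct arm poses on a uniform grid over joint space."""
    # Each added joint multiplies the pose count by the grid resolution.
    return positions_per_joint ** joints

# Typical arm sizes (6 or 7 joints) versus a 20-joint snake-like arm.
for joints in (6, 7, 20):
    print(f"{joints:>2} joints: {grid_configurations(joints):.0e} configurations")
```

Under these assumptions, going from 6 to 20 joints multiplies the search space by a factor of 10^14, which is why each extra joint is costly for methods that reason explicitly over joint space.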

Figure 8 - Examples of real and fictional beyond-human systems

(Top left) - Boston Dynamics’s Spot Quadruped with an additional arm (Source: Boston Dynamics)

(Top Middle) - Unitree’s Go2-W wheeled quadruped (Source: Unitree)

(Top Right) - Depiction of the Hindu god Vishnu, with four arms (Source: HD Asian Art)

(Bottom Left) - Dexterity’s ‘Industrial Superhuman’ Mech truck loader (Source: Dexterity)

(Bottom Right) - Dr Octopus’s flexible snake arms, from Spider-Man 2 (Source: Fandom.com)

Part 1 - Closing Thoughts

Whether a humanoid or not, Generative AI is laying the foundation for a new generation of robots that look very different from most robots deployed today. These GenAI-powered robots also need to be built & designed differently, as they are meant to tackle a variety of non-specific tasks, controlled via a very different software stack than today’s robots. This massive shift gives the entire robotics ecosystem an opportunity, for the first time in decades, to rethink how robots are built and deployed. This also creates an opportunity for robots to be mass produced at a scale that has never been seen before in robotics, creating the potential for massively reducing the cost of these systems (and thus increasing the size of the opportunity). We’ll dive deeper into how we get to mass produced robots in Part 2 (Coming Soon)…